Running the MapReduce Examples (hadoop-mapreduce-examples-*.jar)

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar

An example program must be given as the first argument.

Valid program names are:

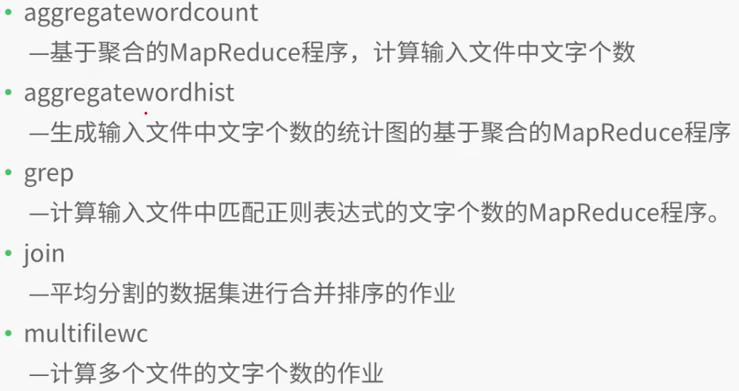

aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files.

aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files.

bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi.

dbcount: An example job that count the pageview counts from a database.

distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi.

grep: A map/reduce program that counts the matches of a regex in the input.

join: A job that effects a join over sorted, equally partitioned datasets

multifilewc: A job that counts words from several files.

pentomino: A map/reduce tile laying program to find solutions to pentomino problems.

pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method.

randomtextwriter: A map/reduce program that writes 10GB of random textual data per node.

randomwriter: A map/reduce program that writes 10GB of random data per node.

secondarysort: An example defining a secondary sort to the reduce.

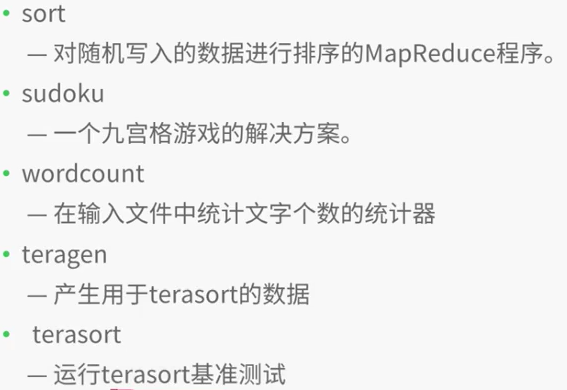

sort: A map/reduce program that sorts the data written by the random writer.

sudoku: A sudoku solver.

teragen: Generate data for the terasort

terasort: Run the terasort

teravalidate: Checking results of terasort

wordcount: A map/reduce program that counts the words in the input files.

wordmean: A map/reduce program that counts the average length of the words in the input files.

wordmedian: A map/reduce program that counts the median length of the words in the input files.

wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files.
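The `pi` entry in the list above is described as a quasi-Monte Carlo estimator. The following is a minimal single-process sketch of that general idea, assuming a Halton sequence (bases 2 and 3) as the low-discrepancy point source. The function names `halton` and `estimate_pi` are illustrative only and this is not the Hadoop implementation: points are placed in a unit square, and the fraction that falls inside the inscribed circle approximates pi/4.

```python
def halton(index, base):
    """Return the index-th (1-based) element of the radical-inverse sequence in `base`."""
    result = 0.0
    fraction = 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result


def estimate_pi(n_samples):
    """Estimate pi from n_samples quasi-random points in a unit square centred at 0."""
    inside = 0
    for i in range(1, n_samples + 1):
        # Shift the point so the square is centred on the origin.
        x = halton(i, 2) - 0.5
        y = halton(i, 3) - 0.5
        # The inscribed circle has radius 0.5, so radius squared is 0.25.
        if x * x + y * y <= 0.25:
            inside += 1
    # Circle area / square area = pi / 4.
    return 4.0 * inside / n_samples


if __name__ == "__main__":
    print(estimate_pi(100_000))
```

In the MapReduce version, the `<nMaps>` and `<nSamples>` arguments would spread this sampling across map tasks; here everything runs in one loop for clarity.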

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar wordcount

Usage: wordcount <in> [<in>...] <out>

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar pi

Usage: org.apache.hadoop.examples.QuasiMonteCarlo <nMaps> <nSamples>

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]

[root@master hadoop-2.7.4]# jps

4912 NameNode

9265 NodeManager

9155 ResourceManager

9561 Jps

5195 SecondaryNameNode

5038 DataNode

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar wordcount /input /output2

17/12/17 16:28:33 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:28:35 INFO input.FileInputFormat: Total input paths to process : 1

17/12/17 16:28:35 INFO mapreduce.JobSubmitter: number of splits:1

17/12/17 16:28:35 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0001

17/12/17 16:28:36 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0001

17/12/17 16:28:37 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0001/

17/12/17 16:28:37 INFO mapreduce.Job: Running job: job_1513499297109_0001

17/12/17 16:29:06 INFO mapreduce.Job: Job job_1513499297109_0001 running in uber mode : false

17/12/17 16:29:06 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:29:25 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:29:40 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 16:29:41 INFO mapreduce.Job: Job job_1513499297109_0001 completed successfully

17/12/17 16:29:42 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=339

FILE: Number of bytes written=242217

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=267

HDFS: Number of bytes written=217

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=16910

Total time spent by all reduces in occupied slots (ms)=9673

Total time spent by all map tasks (ms)=16910

Total time spent by all reduce tasks (ms)=9673

Total vcore-milliseconds taken by all map tasks=16910

Total vcore-milliseconds taken by all reduce tasks=9673

Total megabyte-milliseconds taken by all map tasks=17315840

Total megabyte-milliseconds taken by all reduce tasks=9905152

Map-Reduce Framework

Map input records=4

Map output records=31

Map output bytes=295

Map output materialized bytes=339

Input split bytes=95

Combine input records=31

Combine output records=29

Reduce input groups=29

Reduce shuffle bytes=339

Reduce input records=29

Reduce output records=29

Spilled Records=58

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=166

CPU time spent (ms)=1380

Physical memory (bytes) snapshot=279044096

Virtual memory (bytes) snapshot=4160716800

Total committed heap usage (bytes)=138969088

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=172

File Output Format Counters

Bytes Written=217

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -ls /output2/

Found 2 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:29 /output2/_SUCCESS

-rw-r--r-- 1 root supergroup 217 2017-12-17 16:29 /output2/part-r-00000

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -cat /output2/part-r-00000

78 1

ai 1

daokc 1

dfksdhlsd 1

dkhgf 1

docke 1

docker 1

erhejd 1

fdjk 1

fdskre 1

fjdk 1

fjdks 1

fjksl 1

fsd 1

go 1

haddop 1

hello 3

hi 1

hki 1

jfdk 1

scalw 1

sd 1

sdkf 1

sdkfj 1

sdl 1

sstem 1

woekd 1

yfdskt 1

yuihej 1
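The counters of the wordcount run line up with this output: 31 map output records were combined into 29 records, which matches 29 distinct words where only `hello` occurs more than once (28 × 1 + 3 = 31). A minimal in-memory sketch of the map, combine, and reduce steps follows. The input lines are hypothetical (the real contents of `/input` are not shown), and the function names are illustrative rather than Hadoop APIs.

```python
from collections import Counter


def map_phase(line):
    """Emit a (word, 1) pair for every whitespace-separated token."""
    return [(word, 1) for word in line.split()]


def combine(pairs):
    """Sum the counts per word within one map task's output."""
    counts = Counter()
    for word, n in pairs:
        counts[word] += n
    return counts


def reduce_phase(partials):
    """Merge the per-task partial counts and return them sorted by word."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return dict(sorted(total.items()))


# Hypothetical input; each line stands in for one input record.
lines = ["hello hadoop", "hello docker", "hello go"]
map_output = [pair for line in lines for pair in map_phase(line)]
result = reduce_phase([combine(map_output)])

if __name__ == "__main__":
    for word, count in result.items():
        print(word, count)
```

Here the six emitted pairs collapse to four combined records, the same kind of reduction as the 31 to 29 seen in the job counters.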

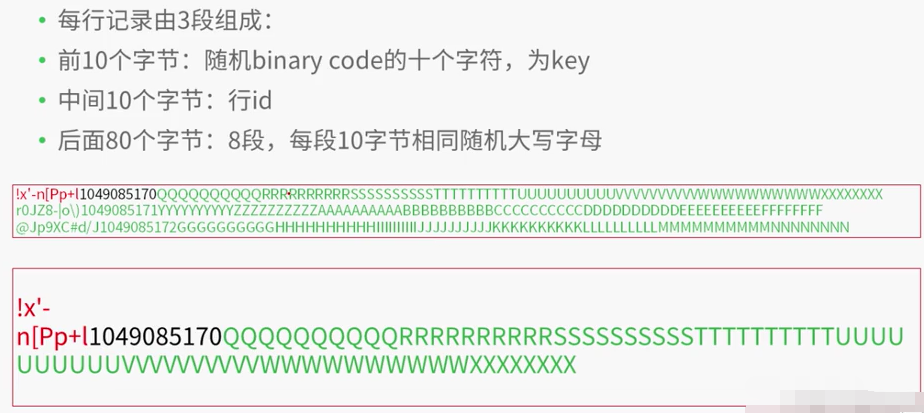

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teragen

teragen <num rows> <output dir>

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teragen 10000 /teragen

17/12/17 16:36:48 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:36:49 INFO terasort.TeraSort: Generating 10000 using 2

17/12/17 16:36:50 INFO mapreduce.JobSubmitter: number of splits:2

17/12/17 16:36:50 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0002

17/12/17 16:36:50 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0002

17/12/17 16:36:50 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0002/

17/12/17 16:36:50 INFO mapreduce.Job: Running job: job_1513499297109_0002

17/12/17 16:37:01 INFO mapreduce.Job: Job job_1513499297109_0002 running in uber mode : false

17/12/17 16:37:01 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:37:19 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:37:21 INFO mapreduce.Job: Job job_1513499297109_0002 completed successfully

17/12/17 16:37:21 INFO mapreduce.Job: Counters: 31

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=240922

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=164

HDFS: Number of bytes written=1000000

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=4

Job Counters

Launched map tasks=2

Other local map tasks=2

Total time spent by all maps in occupied slots (ms)=30146

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=30146

Total vcore-milliseconds taken by all map tasks=30146

Total megabyte-milliseconds taken by all map tasks=30869504

Map-Reduce Framework

Map input records=10000

Map output records=10000

Input split bytes=164

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=434

CPU time spent (ms)=1400

Physical memory (bytes) snapshot=161800192

Virtual memory (bytes) snapshot=4156805120

Total committed heap usage (bytes)=35074048

org.apache.hadoop.examples.terasort.TeraGen$Counters

CHECKSUM=21555350172850

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=1000000
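The `Bytes Written=1000000` counter can be checked with simple arithmetic, assuming the fixed 100-byte row size of the TeraGen record format. The sketch below uses the row count from the command line and the two map tasks reported in the log; the variable names are illustrative.

```python
ROW_BYTES = 100      # assumed fixed size of one TeraGen row
num_rows = 10000     # <num rows> argument passed to teragen
num_maps = 2         # "Generating 10000 using 2" in the log

total_bytes = num_rows * ROW_BYTES
bytes_per_map = total_bytes // num_maps  # rows are split evenly across map tasks

if __name__ == "__main__":
    print(total_bytes, bytes_per_map)
```

Under that assumption, each map task would write one `part-m-*` file of 500000 bytes.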

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -ls /teragen

Found 3 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:37 /teragen/_SUCCESS

-rw-r--r-- 1 root supergroup 500000 2017-12-17 16:37 /teragen/part-m-00000

-rw-r--r-- 1 root supergroup 500000 2017-12-17 16:37 /teragen/part-m-00001

[root@centos hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar terasort /teragen /terasort

17/12/17 16:46:24 INFO terasort.TeraSort: starting

17/12/17 16:46:25 INFO input.FileInputFormat: Total input paths to process : 2

Spent 135ms computing base-splits.

Spent 3ms computing TeraScheduler splits.

Computing input splits took 139ms

Sampling 2 splits of 2

Making 1 from 10000 sampled records

Computing parititions took 384ms

Spent 530ms computing partitions.

17/12/17 16:46:26 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:46:27 INFO mapreduce.JobSubmitter: number of splits:2

17/12/17 16:46:27 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0003

17/12/17 16:46:28 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0003

17/12/17 16:46:28 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0003/

17/12/17 16:46:28 INFO mapreduce.Job: Running job: job_1513499297109_0003

17/12/17 16:46:38 INFO mapreduce.Job: Job job_1513499297109_0003 running in uber mode : false

17/12/17 16:46:38 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:47:19 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:47:41 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 16:47:44 INFO mapreduce.Job: Job job_1513499297109_0003 completed successfully

17/12/17 16:47:45 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=1040006

FILE: Number of bytes written=2445488

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1000208

HDFS: Number of bytes written=1000000

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=87622

Total time spent by all reduces in occupied slots (ms)=12795

Total time spent by all map tasks (ms)=87622

Total time spent by all reduce tasks (ms)=12795

Total vcore-milliseconds taken by all map tasks=87622

Total vcore-milliseconds taken by all reduce tasks=12795

Total megabyte-milliseconds taken by all map tasks=89724928

Total megabyte-milliseconds taken by all reduce tasks=13102080

Map-Reduce Framework

Map input records=10000

Map output records=10000

Map output bytes=1020000

Map output materialized bytes=1040012

Input split bytes=208

Combine input records=0

Combine output records=0

Reduce input groups=10000

Reduce shuffle bytes=1040012

Reduce input records=10000

Reduce output records=10000

Spilled Records=20000

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=3246

CPU time spent (ms)=3580

Physical memory (bytes) snapshot=400408576

Virtual memory (bytes) snapshot=6236995584

Total committed heap usage (bytes)=262987776

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1000000

File Output Format Counters

Bytes Written=1000000

17/12/17 16:47:45 INFO terasort.TeraSort: done

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -ls /terasort

Found 3 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:47 /terasort/_SUCCESS

-rw-r--r-- 10 root supergroup 0 2017-12-17 16:46 /terasort/_partition.lst

-rw-r--r-- 1 root supergroup 1000000 2017-12-17 16:47 /terasort/part-r-00000

[root@centos hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teravalidate /terasort /report

17/12/17 17:03:46 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 17:03:48 INFO input.FileInputFormat: Total input paths to process : 1

Spent 56ms computing base-splits.

Spent 3ms computing TeraScheduler splits.

17/12/17 17:03:48 INFO mapreduce.JobSubmitter: number of splits:1

17/12/17 17:03:49 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0007

17/12/17 17:03:49 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0007

17/12/17 17:03:49 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0007/

17/12/17 17:03:49 INFO mapreduce.Job: Running job: job_1513499297109_0007

17/12/17 17:04:00 INFO mapreduce.Job: Job job_1513499297109_0007 running in uber mode : false

17/12/17 17:04:00 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 17:04:08 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 17:04:19 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 17:04:20 INFO mapreduce.Job: Job job_1513499297109_0007 completed successfully

17/12/17 17:04:20 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=92

FILE: Number of bytes written=241805

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1000105

HDFS: Number of bytes written=22

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=4952

Total time spent by all reduces in occupied slots (ms)=8032

Total time spent by all map tasks (ms)=4952

Total time spent by all reduce tasks (ms)=8032

Total vcore-milliseconds taken by all map tasks=4952

Total vcore-milliseconds taken by all reduce tasks=8032

Total megabyte-milliseconds taken by all map tasks=5070848

Total megabyte-milliseconds taken by all reduce tasks=8224768

Map-Reduce Framework

Map input records=10000

Map output records=3

Map output bytes=80

Map output materialized bytes=92

Input split bytes=105

Combine input records=0

Combine output records=0

Reduce input groups=3

Reduce shuffle bytes=92

Reduce input records=3

Reduce output records=1

Spilled Records=6

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=193

CPU time spent (ms)=1250

Physical memory (bytes) snapshot=281731072

Virtual memory (bytes) snapshot=4160716800

Total committed heap usage (bytes)=139284480

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1000000

File Output Format Counters

Bytes Written=22

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -ls /report

Found 2 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 17:04 /report/_SUCCESS

-rw-r--r-- 1 root supergroup 22 2017-12-17 17:04 /report/part-r-00000

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -cat /report/part-r-00000

checksum 139abefd74b2
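The report contains only a checksum line, which suggests that validation found no ordering problems. The checksum is printed in hexadecimal, while the `CHECKSUM` counter of the earlier teragen run was printed in decimal. A short sketch for comparing the two values, both copied from the transcript above (the variable names are illustrative):

```python
# CHECKSUM counter reported by the teragen job (decimal).
teragen_checksum = 21555350172850

# Checksum written by teravalidate to /report/part-r-00000 (hexadecimal).
teravalidate_checksum = int("139abefd74b2", 16)

checksums_match = teragen_checksum == teravalidate_checksum

if __name__ == "__main__":
    print(checksums_match)
```

The two values are equal, so the sorted output holds the same rows that teragen produced.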
